02 Dec 2016

GOV.UK, the UK’s single website for government information and services, is built in the open by a team within the Government Digital Service (GDS). The GOV.UK team is made up of several product, content and support teams that all work together to provide a single service for the public and for civil servants. The GOV.UK team makes their product roadmap available online and posts regular updates via the GOV.UK blog.

Within the GDS offices in Holborn, the GOV.UK product backlog is up on a physical wall. Once a week, all the teams meet around the wall and discuss their progress with their colleagues. The layout of the wall has gone through several interesting iterations over the last few months (even if the interest may be limited to agile geeks!).

The wall at the start of 2016

In the first half of 2016, the product wall looked as described in Neil Williams’s excellent article on Mind the Product:

GOV.UK Wall, 2014 (c) Neil Williams

GOV.UK Wall, 2014 (c) Neil Williams

Each team had their own swim lanes, and the cards were very colourful and detailed.

GOV.UK Wall cards, 2014 (c) Neil Williams

GOV.UK Wall cards, 2014 (c) Neil Williams

The cards were colour-coded, reflected dependencies that the particular had on other work, and unfolding each card would reveal even more detail.

The wall in the summer of 2016

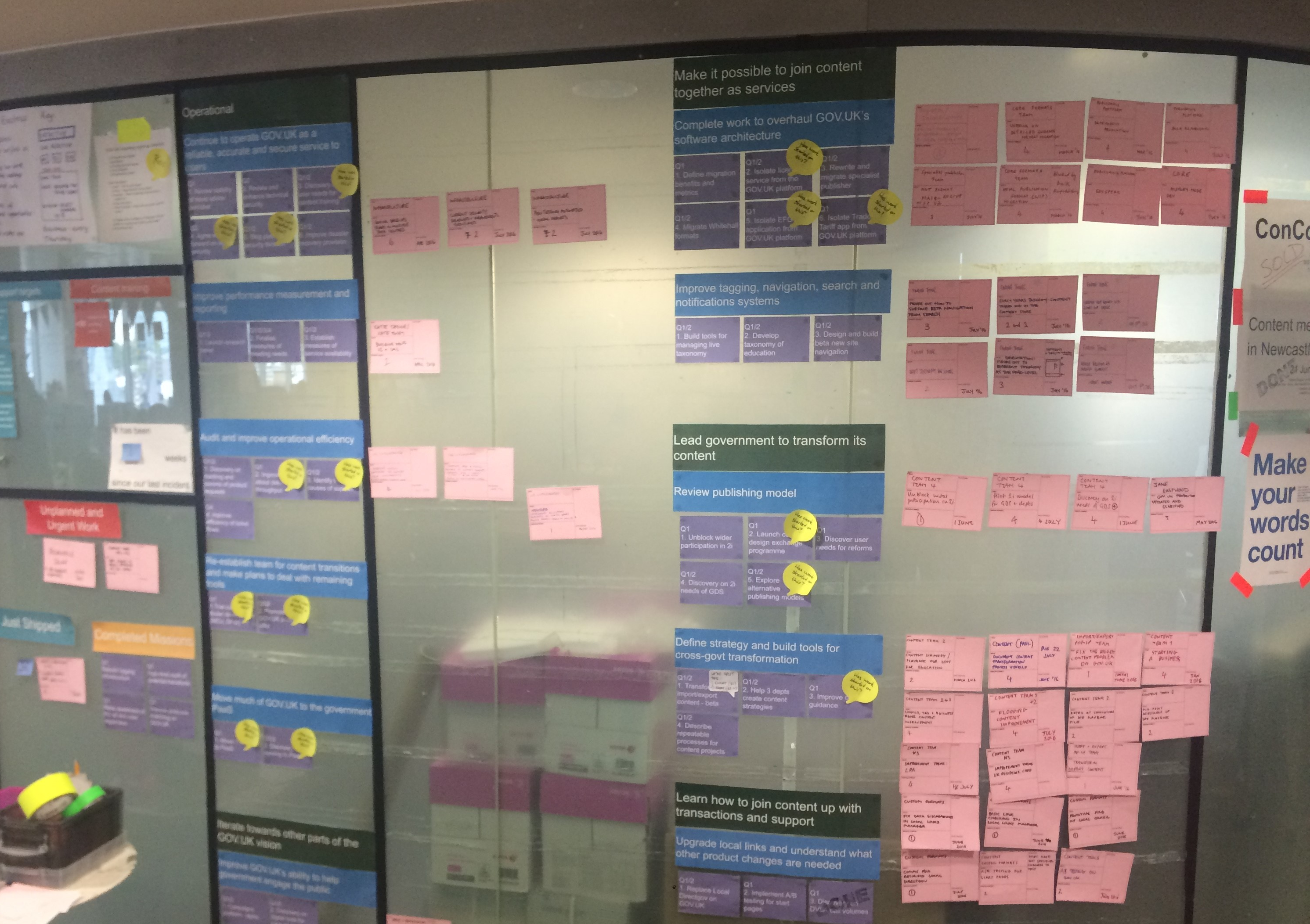

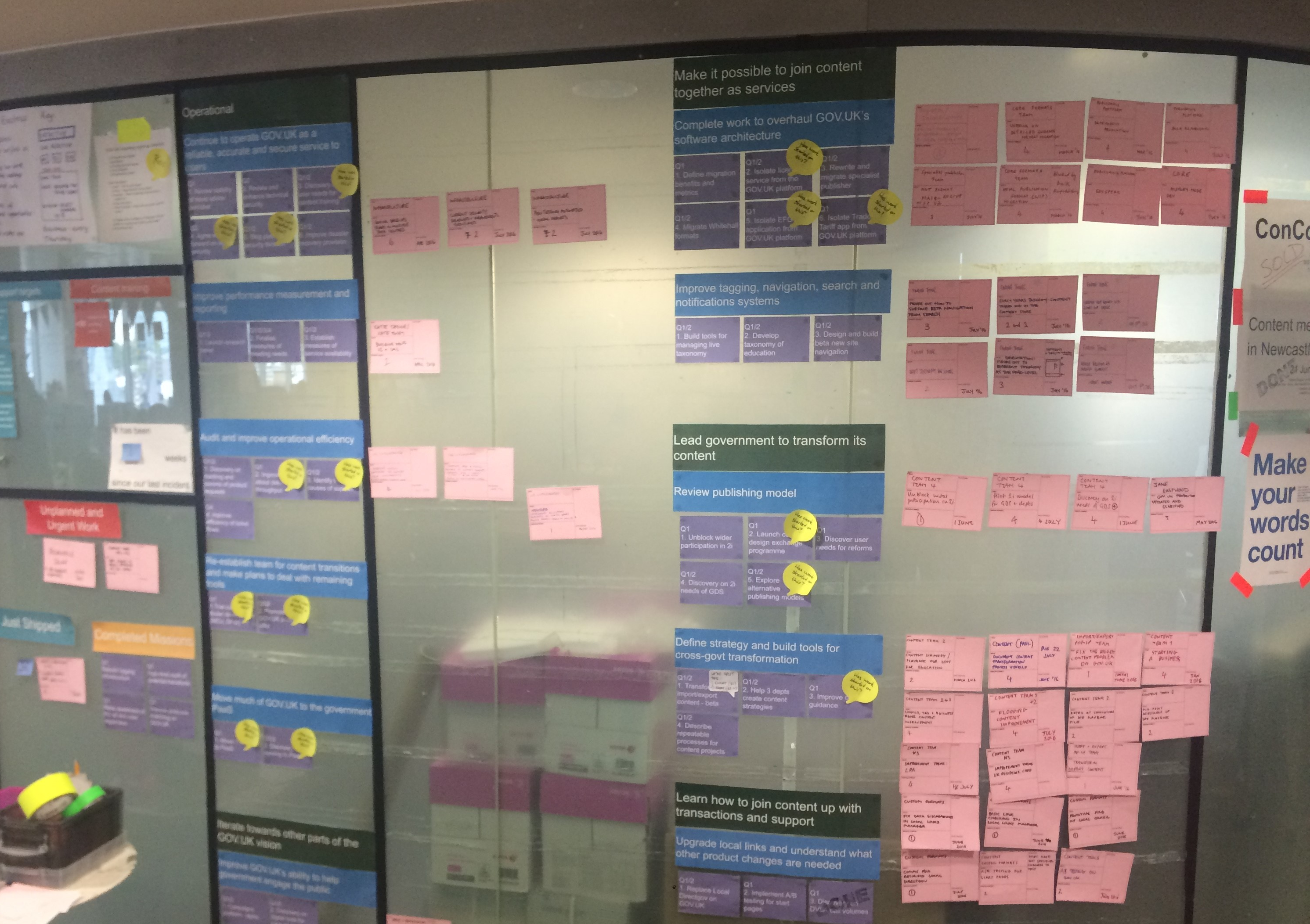

By the summer of 2016, the team had moved away from team swimlanes and re-organised the work according to the GOV.UK objectives.

GOV.UK Wall, summer 2016 (c) Ruth Bucknell

GOV.UK Wall, summer 2016 (c) Ruth Bucknell

Teams had to work out how their epics and stories contributed to wider GOV.UK objectives, and during the wall stand-up, the facilitator would walk through the objectives, rather than the teams. Teams might end up giving updates several times during the stand-up, if their work contributed to multiple objectives.

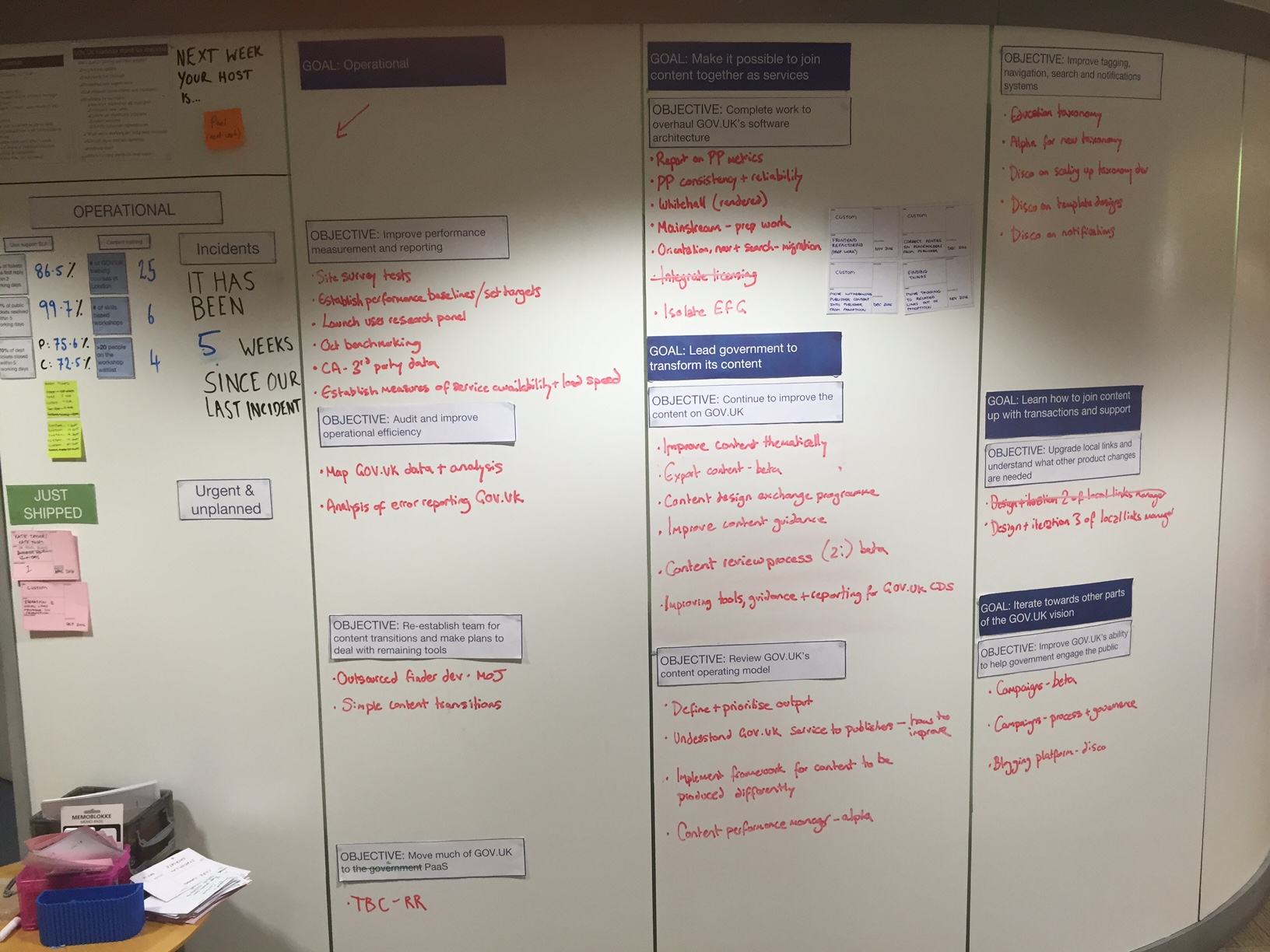

The wall in December 2016

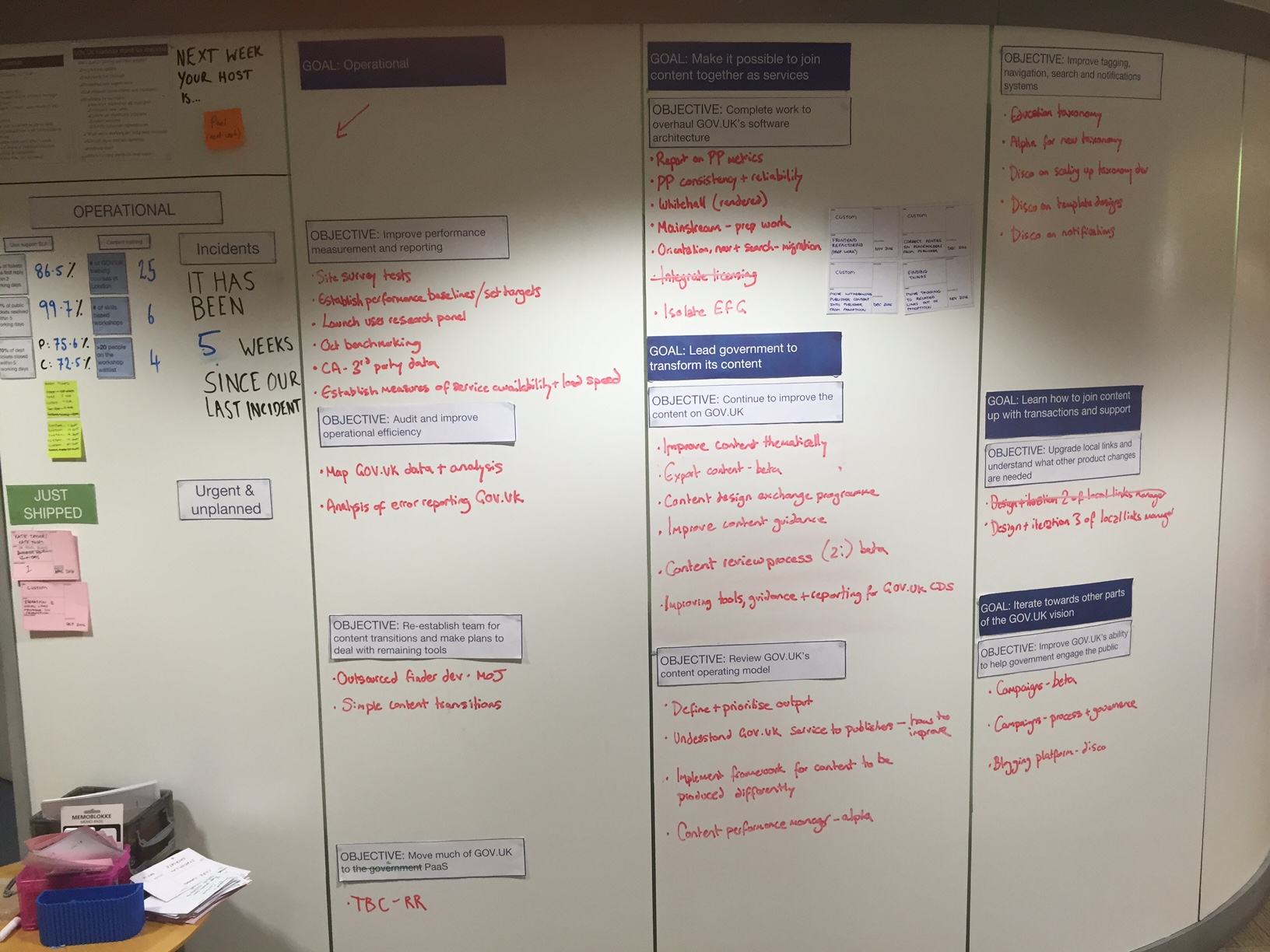

GOV.UK Wall in Dec 2016

GOV.UK Wall in Dec 2016

By December 2016, the wall has been iterated again. This time, the glass pane and printed cards have been replaced with a whiteboard and hand-written entries. All the detail has been stripped away but entries can now be added or changed much easier than before. Getting rid of the cards has meant that the person giving the update now faces their colleagues, as opposed to talking at the card.

24 Nov 2014

Anonymous feedback from GOV.UK’s users has proven immensely useful since the site launched in 2012. This post describes how this feedback is captured and what makes it useful.

Problem reports

Most pages on GOV.UK prompt users to leave anonymous feedback, through the “Is there anything wrong with this page?” form (like the form at the bottom of the VAT rates page). The form captures what the user was doing, and what went wrong for them. This type of anonymous feedback provides both qualitative and quantitative data for the team.

Problem reports as qualitative data

The problem reports that users submit are highly contextual, unfiltered pieces of feedback which document the their frustrations from interacting with that particular content or service. While many reports may not be actionable directly, regularly going through the feedback often triggers insights in the same way that other methods of user research, and should be a regular activity for the product managers, user researchers and business analysts on the team.

Problem reports as quantitative data

When analysing problem reports in aggregate, the page’s contact rate (defined as the number of problem reports on a particular page, relative to the number of page views that that page receives) is the most useful metric. A high contact rate for a page is likely to point to a persistent issue with the content or policy that’s expressed on that page. For a team managing a large number of pages, contact rate can help identify the content areas which require the most urgent improvements.

Feedback on transactions

Some services direct users to leave feedback on a GOV.UK “done” page (like the “done” page for the Register to Vote service). Users are prompted to leave a rating of their experience (between “very dissatisfied” and “very satisfied”) and an optional comment.

The ratings are used to measure the service’s user satisfaction metric and the comments are useful in the same way as the problem report comments.

07 May 2014

GOV.UK was brought up in Parliament yesterday. Some praise, and I liked the challenge laid down to improve the usability.

Lord Leigh of Hurley:

In fact, last week I attempted to put myself in the shoes of such an SME businessman and visited the government website to seek help. As your Lordships may know, the new entry portal for all government help is www.gov.uk and on the very front page there is a link to business. Within two clicks I reached a page that enabled me to read about government-backed support and finance for my imaginary business. This in itself is incredibly impressive. However, unfortunately the clarity ended there. To my horror, the next page offered a choice of 791 different schemes to assist me. Equal prominence was given to the somewhat parochial Barking Enterprise Centre and the Crofting Counties Agricultural Grant Scheme in Elgin. While undoubtedly very worth while, they were given the same prominence as the perhaps more relevant export credit guarantee scheme. There is of course the opportunity to filter down your requirements, and I did then select finance for a business based in London with up to 250 employees in the service sector at the growth stage, and this managed to narrow the schemes down to 42, although surprisingly no filter was offered for people looking specifically for export finance. Accordingly, I would like to suggest providing a very early help button in the government website so that potential SME exporters can have short but direct conversations with experienced UKTI advisers about the route through the maze that is offered to them—because the help is actually there. I believe that this was included in Recommendations 11 and 12 of the Select Committee report, but I have not found any real evidence of their being implemented.

06 Dec 2013

This question has been discussed on the DbFit forums a few times (and very similar discussions have taken place over at the FitNesse forums); here is my 2c on the subject.

Unit tests and executable specifications

DbFit was originally designed as a unit-level testing framework for database code. I (and many others) have also used DbFit (and FitNesse) for writing executable specifications - I believe that the tool is a really good fit for those types of tests.

Now, to maintain quality in large and complex software, those types of tests probably aren’t enough - most teams will want to also regularly run performance and/or production data tests as well. Now, can DbFit be used to run those tests? Sure. Should DbFit be used to run those tests? I’m not so sure.

Technical reasons against

Problems start when you start considering input data. You could choose to insert the data using DbFit (and quickly find that a multi-thousand Insert table is a maintenance nightmare), or find yourself writing custom fixtures that import data from a flat file. Both approaches are very clunky.

If, say, you wish to compare the results of two 100,000 row resultsets with DbFit - not uncommon for production data tests. You might try to do this with the compare stored queries table, but this will probably be horribly slow, if it works at all. The reason for this is the computationally inefficient algorithm that compare stored queries uses for comparison - the algorithm works fine for 10, 100 (and even 1000) rows, but doesn’t scale well beyond that.

Even if the comparison worked, in the case that you get failures, there’s currently no way to filter out matching from non-matching rows, meaning that the user basically has to scroll up and down the results to find the failures - not ideal.

These certainly aren’t insurmountable technical hurdles, and could be fixed with moderate effort within DbFit.

Philosophical reasons against

A tool like DbFit brings a significant amount of complexity (and consequently cost). You have to spend time:

- setting DbFit up as part of the build system

- learning the syntax

- debugging when it doesn’t like the latest environment change

(the list goes on) and that can all eat up a large amount of time.

A “one tool fits all” approach might seem tempting, but here a “best tool for the job” tends to cause less issues in the long-term. If your prod data tests looks like this:

- Identify some production data

- Run the production data against the old version of the system

- Run the production data against the new version of the system

- Manually compare output of the two versions

you are likely to see a better cost/benefit ratio by using a simpler approach to triggering the test runs, comparing data and seeing the differences (eg running steps 2, 3 from a shell script, and using a simple comparison query, again on the command line) than using DbFit.

31 Oct 2013

Good news, everybody - the long-overdue release of DbFit 2.0.0 is finally here. As always, the ZIP archive can be downloaded from the DbFit homepage.

This release is identical to the 2.0.0-RC5 release, which hasn’t had any show-stopping bugs raised against it for several months now; you can learn more about it in the release notes.

Enormous thanks to everyone who has contributed code, created bug reports and supported users on the DbFit Google Group - please keep doing it!

Beyond 2.0.0

I’m aware that there’s a significant number of changes that are sitting unreleased on the master branch - my plan is to release 2.1 with those changes as soon as possible. From 2.0 onwards, we will try to stick to smaller, more frequent releases and adopt semantic versioning, so that it’s clearer how much has changed between the releases.

GOV.UK Wall, 2014 (c) Neil Williams

GOV.UK Wall, 2014 (c) Neil Williams GOV.UK Wall cards, 2014 (c) Neil Williams

GOV.UK Wall cards, 2014 (c) Neil Williams GOV.UK Wall, summer 2016 (c) Ruth Bucknell

GOV.UK Wall, summer 2016 (c) Ruth Bucknell GOV.UK Wall in Dec 2016

GOV.UK Wall in Dec 2016